Let $X = (X_1, \ldots, X_n)^T$ be an $n$-dimensional vector of random variables.

For all $x = (x_1, \ldots, x_n)^T \in \mathbb{R}^n$, the joint cumulative distribution function (CDF) of $X$ satisfies

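$$F_X(x) = F_X(x_1, \ldots, x_n) = P(X_1 \le x_1, \ldots, X_n \le x_n).$$

For a fixed $i$, the marginal CDF of $X_i$ is obtained by letting every other argument tend to infinity:

$$F_{X_i}(x_i) = F_X(\infty, \ldots, \infty, x_i, \infty, \ldots, \infty).$$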

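It is straightforward to generalise the previous definition to joint marginal distributions. For example, the joint marginal distribution of $X_i$ and $X_j$ satisfies

$$F_{X_i, X_j}(x_i, x_j) = P(X_i \le x_i, X_j \le x_j) = F_X(\infty, \ldots, \infty, x_i, \infty, \ldots, \infty, x_j, \infty, \ldots, \infty).$$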
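As a rough illustration, the joint, marginal and joint marginal CDFs can all be estimated from a sample by counting. The sketch below is a minimal version under our own assumptions: `empirical_cdf` and the data are hypothetical, and passing `math.inf` in a coordinate plays the role of the $\infty$ arguments above.

```python
import math
from typing import Sequence

def empirical_cdf(samples: Sequence[Sequence[float]], x: Sequence[float]) -> float:
    """Fraction of sample vectors u with u_k <= x_k in every coordinate k.

    Estimates F_X(x) = P(X_1 <= x_1, ..., X_n <= x_n). Setting a coordinate
    of x to math.inf removes that constraint, which turns the joint CDF into
    a marginal or joint marginal CDF.
    """
    hits = sum(all(u_k <= x_k for u_k, x_k in zip(u, x)) for u in samples)
    return hits / len(samples)

# Hypothetical sample of 3-dimensional vectors (X_1, X_2, X_3).
data = [
    (0.1, 1.0, 2.0),
    (0.5, 0.2, 1.5),
    (0.9, 0.8, 0.1),
    (0.3, 1.2, 0.7),
]

joint = empirical_cdf(data, (0.5, 1.0, 1.5))                  # estimate of F_X(0.5, 1.0, 1.5)
marginal_x1 = empirical_cdf(data, (0.5, math.inf, math.inf))  # estimate of F_{X_1}(0.5)
joint_x1_x3 = empirical_cdf(data, (0.5, math.inf, 1.5))       # estimate of F_{X_1,X_3}(0.5, 1.5)
print(joint, marginal_x1, joint_x1_x3)  # 0.25 0.75 0.5
```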

If $X_1 = (X_1, \ldots, X_k)^T$ and $X_2 = (X_{k+1}, \ldots, X_n)^T$ is a partition of $X$ (so that here $X_1$ and $X_2$ denote sub-vectors of $X$), then the conditional CDF of $X_2$ given $X_1$ satisfies

$$F_{X_2|X_1}(x_2 | x_1) = P(X_2 \le x_2 \mid X_1 = x_1).$$

If $X$ has a PDF $f_X(\cdot)$, then the conditional PDF of $X_2$ given $X_1$ satisfies

$$f_{X_2|X_1}(x_2 | x_1) = \frac{f_X(x)}{f_{X_1}(x_1)} = \frac{f_{X_1|X_2}(x_1 | x_2)\, f_{X_2}(x_2)}{f_{X_1}(x_1)},$$

where the second equality uses $f_X(x) = f_{X_1|X_2}(x_1 | x_2)\, f_{X_2}(x_2)$ (Bayes' rule), and the conditional CDF of $X_2$ given $X_1$ satisfies

$$F_{X_2|X_1}(x_2 | x_1) = \int_{-\infty}^{x_{k+1}} \cdots \int_{-\infty}^{x_n} \frac{f_X(x_1, \ldots, x_k, u_{k+1}, \ldots, u_n)}{f_{X_1}(x_1)}\, du_{k+1} \cdots du_n,$$

where $f_{X_1}(\cdot)$ is the joint marginal PDF of $X_1$, which satisfies

$$f_{X_1}(x_1, \ldots, x_k) = \int_{-\infty}^{\infty} \cdots \int_{-\infty}^{\infty} f_X(x_1, \ldots, x_k, u_{k+1}, \ldots, u_n)\, du_{k+1} \cdots du_n.$$

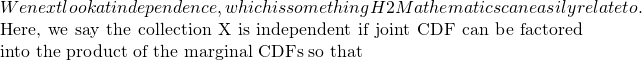
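To make the marginal and conditional PDF formulas concrete, the sketch below assumes (our choice of example, not part of the text) that $X = (X_1, X_2)$ is bivariate normal with standard normal marginals and correlation `rho`. It integrates the joint PDF over $u$ with the trapezoid rule to recover $f_{X_1}$, forms $f_{X_2|X_1} = f_X / f_{X_1}$, and compares the result with the known $N(\rho x_1, 1 - \rho^2)$ conditional density. All function names are hypothetical.

```python
import math

def bivariate_normal_pdf(x1: float, x2: float, rho: float) -> float:
    # Joint PDF of a standard bivariate normal with correlation rho (assumed example model).
    det = 1.0 - rho * rho
    q = (x1 * x1 - 2.0 * rho * x1 * x2 + x2 * x2) / det
    return math.exp(-0.5 * q) / (2.0 * math.pi * math.sqrt(det))

def normal_pdf(x: float, mean: float = 0.0, var: float = 1.0) -> float:
    # Univariate normal density with the given mean and variance.
    return math.exp(-0.5 * (x - mean) ** 2 / var) / math.sqrt(2.0 * math.pi * var)

def marginal_pdf_x1(x1: float, rho: float, lo: float = -10.0, hi: float = 10.0,
                    steps: int = 4000) -> float:
    # f_{X_1}(x1) = integral of f_X(x1, u) du, approximated by the trapezoid rule on [lo, hi].
    h = (hi - lo) / steps
    total = 0.5 * (bivariate_normal_pdf(x1, lo, rho) + bivariate_normal_pdf(x1, hi, rho))
    for k in range(1, steps):
        total += bivariate_normal_pdf(x1, lo + k * h, rho)
    return total * h

def conditional_pdf_x2_given_x1(x2: float, x1: float, rho: float) -> float:
    # f_{X_2|X_1}(x2 | x1) = f_X(x1, x2) / f_{X_1}(x1)
    return bivariate_normal_pdf(x1, x2, rho) / marginal_pdf_x1(x1, rho)

rho, x1, x2 = 0.6, 0.8, -0.3
print(marginal_pdf_x1(x1, rho), normal_pdf(x1))
print(conditional_pdf_x2_given_x1(x2, x1, rho), normal_pdf(x2, rho * x1, 1 - rho**2))
```

Both printed pairs should agree to many decimal places; setting `rho = 0` makes the conditional density collapse to the marginal of $X_2$.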

A collection of random variables $X_1, \ldots, X_n$ is independent if its joint CDF can be factored into the product of the marginal CDFs:

$$F_X(x_1, \ldots, x_n) = F_{X_1}(x_1) \cdots F_{X_n}(x_n).$$

If $X$ has a PDF $f_X(\cdot)$, then independence is equivalent to the joint PDF factorising in the same way:

$$f_X(x) = f_{X_1}(x_1) \cdots f_{X_n}(x_n).$$

In particular, if the sub-vectors $X_1$ and $X_2$ of the partition above are independent, then

$$f_{X_2|X_1}(x_2 | x_1) = \frac{f_X(x)}{f_{X_1}(x_1)} = \frac{f_{X_1}(x_1)\, f_{X_2}(x_2)}{f_{X_1}(x_1)} = f_{X_2}(x_2),$$

so knowing $X_1$ tells us nothing about $X_2$.

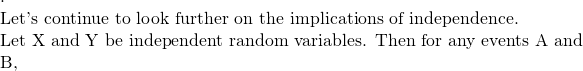
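The same factorisation can be checked exactly for discrete random variables, with probability mass functions (PMFs) in place of densities. The sketch below is a hypothetical example using exact fractions: it builds a joint PMF as the product of two marginals, confirms that it factorises, and confirms that the conditional PMF of $X_2$ given $X_1$ equals the marginal of $X_2$. A dependent pair ($X_2 = X_1$) is included for contrast.

```python
from fractions import Fraction
from itertools import product

# Hypothetical marginal PMFs of X_1 and X_2.
p1 = {0: Fraction(1, 4), 1: Fraction(3, 4)}
p2 = {-1: Fraction(1, 3), 0: Fraction(1, 6), 2: Fraction(1, 2)}

# Joint PMF of independent X_1, X_2: the product of the marginals.
joint = {(a, b): p1[a] * p2[b] for a, b in product(p1, p2)}

def marginals(joint_pmf):
    # Recover both marginals by summing out the other coordinate.
    m1, m2 = {}, {}
    for (a, b), p in joint_pmf.items():
        m1[a] = m1.get(a, 0) + p
        m2[b] = m2.get(b, 0) + p
    return m1, m2

def is_independent(joint_pmf) -> bool:
    # Independence <=> p(a, b) = p_1(a) * p_2(b) for every pair (a, b).
    m1, m2 = marginals(joint_pmf)
    return all(joint_pmf.get((a, b), 0) == m1[a] * m2[b] for a in m1 for b in m2)

def conditional_x2_given_x1(joint_pmf, a):
    # p(b | a) = p(a, b) / p_1(a), the discrete analogue of f_{X_2|X_1}.
    m1, m2 = marginals(joint_pmf)
    return {b: joint_pmf.get((a, b), 0) / m1[a] for b in m2}

dependent = {(0, 0): Fraction(1, 2), (1, 1): Fraction(1, 2)}  # X_2 = X_1

print(is_independent(joint))                    # True
print(conditional_x2_given_x1(joint, 1) == p2)  # True: X_1 carries no information about X_2
print(is_independent(dependent))                # False
```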

If $X$ and $Y$ are independent, then for any sets $A$ and $B$,

$$P(X \in A, Y \in B) = P(X \in A)\, P(Y \in B).$$

To see this, write the probability as the expectation of a product of indicator functions and use independence:

$$P(X \in A, Y \in B) = \mathbb{E}[1_{\{X \in A\}} 1_{\{Y \in B\}}] = \mathbb{E}[1_{\{X \in A\}}]\, \mathbb{E}[1_{\{Y \in B\}}] = P(X \in A)\, P(Y \in B).$$

More generally, if $X_1, \ldots, X_n$ are independent, then for any functions $f_1, \ldots, f_n$ for which the expectations exist,

$$\mathbb{E}[f_1(X_1) f_2(X_2) \cdots f_n(X_n)] = \mathbb{E}[f_1(X_1)]\, \mathbb{E}[f_2(X_2)] \cdots \mathbb{E}[f_n(X_n)].$$
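A quick exact check of this product rule: the sketch below (hypothetical distributions and functions, exact arithmetic with `fractions.Fraction`) computes $\mathbb{E}[f(X) g(Y)]$ directly from a joint PMF and compares it with $\mathbb{E}[f(X)]\,\mathbb{E}[g(Y)]$, first for an independent pair and then for a dependent pair where the identity fails.

```python
from fractions import Fraction
from itertools import product

def expect(pmf, h):
    # E[h(V)] for a discrete random variable (or pair) V with PMF {value: probability}.
    return sum(p * h(v) for v, p in pmf.items())

px = {1: Fraction(1, 3), 2: Fraction(1, 3), 3: Fraction(1, 3)}  # X uniform on {1, 2, 3}
py = {0: Fraction(1, 2), 1: Fraction(1, 2)}                     # Y uniform on {0, 1}

f = lambda x: x * x      # hypothetical f
g = lambda y: 2 * y + 1  # hypothetical g

# Independent pair: joint PMF is the product of the marginals.
indep = {(x, y): px[x] * py[y] for x, y in product(px, py)}
lhs = expect(indep, lambda v: f(v[0]) * g(v[1]))
rhs = expect(px, f) * expect(py, g)
print(lhs, rhs)  # 28/3 28/3

# Dependent pair: Y = X mod 2, so the joint PMF is not a product.
dep = {(x, x % 2): px[x] for x in px}
py_dep = {}
for (x, y), p in dep.items():
    py_dep[y] = py_dep.get(y, 0) + p  # marginal PMF of Y under the dependent model
lhs_dep = expect(dep, lambda v: f(v[0]) * g(v[1]))
rhs_dep = expect(px, f) * expect(py_dep, g)
print(lhs_dep, rhs_dep)  # 34/3 98/9
```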

Random variables $X$ and $Y$ are conditionally independent given $Z$ if

$$\mathbb{E}[f(X) g(Y) \mid Z] = \mathbb{E}[f(X) \mid Z]\, \mathbb{E}[g(Y) \mid Z]$$

for all functions $f$ and $g$ for which these expectations exist. This will be used in the Gaussian copula model for pricing collateralised debt obligations (CDOs).

![Multivariate Gaussian. Source: www.turingfinance.com](http://theculture.sg/wp-content/uploads/2016/01/620x346xMultivariate_Gaussian_Fixed-1024x572.png.pagespeed.ic_.Tx7wEea-aO-300x168.png)

We let $D_i$ be the event that the $i^{th}$ bond in a portfolio defaults. Defaults of different bonds tend to be driven by common economic conditions, so rather than assuming that the $D_i$'s are independent, we assume that they are conditionally independent given a common factor $Z$, so that

$$P(D_1, \ldots, D_n \mid Z) = P(D_1 \mid Z) \cdots P(D_n \mid Z).$$

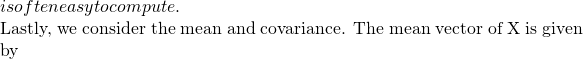
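As a sketch of how conditional independence is exploited, assume the common one-factor Gaussian set-up (an assumption on our part; the text above only states the conditional independence property): bond $i$ defaults when $\sqrt{\rho}\, Z + \sqrt{1-\rho}\, \varepsilon_i < \Phi^{-1}(p)$, with $Z, \varepsilon_1, \ldots, \varepsilon_n$ independent standard normals, so each bond defaults with probability $p$. Given $Z = z$ the defaults are independent with common probability $q(z)$, so the number of defaults is conditionally binomial, and averaging over $Z$ gives its unconditional distribution. The names `p`, `rho` and `default_count_distribution` are hypothetical.

```python
import math
from statistics import NormalDist

STD_NORMAL = NormalDist()  # standard normal distribution: mean 0, sigma 1

def conditional_default_prob(p: float, rho: float, z: float) -> float:
    # q(z) = P(D_i | Z = z) = Phi((Phi^{-1}(p) - sqrt(rho) * z) / sqrt(1 - rho))  (assumed model)
    threshold = STD_NORMAL.inv_cdf(p)
    return STD_NORMAL.cdf((threshold - math.sqrt(rho) * z) / math.sqrt(1.0 - rho))

def default_count_distribution(n: int, p: float, rho: float,
                               z_max: float = 8.0, nodes: int = 2001) -> list:
    """P(K = k) for k = 0..n, where K is the number of defaults among n bonds.

    Conditional independence given Z gives P(K = k | Z = z) = C(n, k) q^k (1 - q)^(n - k);
    this is averaged over Z ~ N(0, 1) with the trapezoid rule on [-z_max, z_max].
    """
    h = 2.0 * z_max / (nodes - 1)
    probs = [0.0] * (n + 1)
    for j in range(nodes):
        z = -z_max + j * h
        weight = h * STD_NORMAL.pdf(z) * (0.5 if j in (0, nodes - 1) else 1.0)
        q = conditional_default_prob(p, rho, z)
        for k in range(n + 1):
            probs[k] += weight * math.comb(n, k) * q**k * (1.0 - q) ** (n - k)
    return probs

dist = default_count_distribution(n=10, p=0.05, rho=0.3)
print(sum(dist))                                 # close to 1
print(sum(k * pk for k, pk in enumerate(dist)))  # close to n * p = 0.5
```

With `rho = 0` the factor drops out and the result is the ordinary Binomial(n, p) distribution; a positive `rho` leaves the expected number of defaults unchanged but fattens the tail (many simultaneous defaults), which is what matters for CDO tranches.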

The mean vector of $X$ is

$$\mathbb{E}[X] := (\mathbb{E}[X_1], \ldots, \mathbb{E}[X_n])^T$$

and the covariance matrix of $X$ is

$$\Sigma := \mathrm{Cov}(X) := \mathbb{E}[(X - \mathbb{E}[X])(X - \mathbb{E}[X])^T],$$

so the $(i,j)^{th}$ element of $\Sigma$ is the covariance of $X_i$ and $X_j$. The covariance matrix is symmetric and its diagonal elements satisfy $\Sigma_{i,i} = \mathrm{Var}(X_i) \ge 0$. It is also positive semi-definite, since $x^T \Sigma x = \mathrm{Var}(x^T X) \ge 0$ for all $x \in \mathbb{R}^n$.

The correlation matrix $\rho(X)$ has $(i,j)^{th}$ element

$$\rho_{ij} := \mathrm{Corr}(X_i, X_j) = \frac{\Sigma_{i,j}}{\sqrt{\Sigma_{i,i}\, \Sigma_{j,j}}}.$$

It is also symmetric and positive semi-definite, and it has 1's along the diagonal.
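The definitions of $\Sigma$ and $\rho(X)$ have direct sample analogues. The sketch below (plain Python, hypothetical data and helper names) computes the sample mean vector, the sample covariance matrix (dividing by $m - 1$, one conventional choice) and the correlation matrix, then checks symmetry, the unit diagonal of $\rho$, and $x^T \Sigma x \ge 0$ for a few test vectors.

```python
import math

def mean_vector(samples):
    # Sample analogue of E[X]: column-wise averages.
    m = len(samples)
    return [sum(col) / m for col in zip(*samples)]

def covariance_matrix(samples):
    # Sample analogue of E[(X - E[X])(X - E[X])^T], using the unbiased 1/(m - 1) factor.
    m, mu = len(samples), mean_vector(samples)
    n = len(mu)
    return [[sum((s[i] - mu[i]) * (s[j] - mu[j]) for s in samples) / (m - 1)
             for j in range(n)] for i in range(n)]

def correlation_matrix(cov):
    # rho_ij = Sigma_ij / sqrt(Sigma_ii * Sigma_jj)
    n = len(cov)
    return [[cov[i][j] / math.sqrt(cov[i][i] * cov[j][j]) for j in range(n)] for i in range(n)]

def quadratic_form(x, mat):
    # x^T M x
    return sum(x[i] * mat[i][j] * x[j] for i in range(len(x)) for j in range(len(x)))

# Hypothetical sample of 3-dimensional observations.
data = [(1.0, 2.0, 0.5), (2.0, 3.5, 0.1), (3.0, 3.9, -0.4), (4.0, 6.1, 0.0), (5.0, 7.0, -1.2)]
sigma = covariance_matrix(data)
rho = correlation_matrix(sigma)

print(all(sigma[i][j] == sigma[j][i] for i in range(3) for j in range(3)))  # symmetry
print([round(rho[i][i], 12) for i in range(3)])                            # unit diagonal
print(all(quadratic_form(x, sigma) >= -1e-12
          for x in [(1, -1, 0), (0.3, 0.2, -2), (-1, 0.5, 1)]))            # PSD spot check
```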

For any matrix $A \in \mathbb{R}^{k \times n}$ and vector $a \in \mathbb{R}^k$,

$$\mathbb{E}[AX + a] = A\, \mathbb{E}[X] + a,$$

$$\mathrm{Cov}(AX + a) = A\, \mathrm{Cov}(X)\, A^T.$$

In particular, for scalar random variables $X$ and $Y$ and constants $a, b \in \mathbb{R}$, applying the second identity to the vector $(X, Y)^T$ with $A = (a \;\; b)$ and a zero shift gives

$$\mathrm{Var}(aX + bY) = a^2\, \mathrm{Var}(X) + b^2\, \mathrm{Var}(Y) + 2ab\, \mathrm{Cov}(X, Y).$$
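Because the sample mean is linear and the sample covariance is bilinear in the data, both identities also hold for sample quantities, up to floating-point rounding, which gives a cheap numerical check. The sketch below uses hypothetical simulated data, a hypothetical $A \in \mathbb{R}^{2 \times 3}$ and $a \in \mathbb{R}^2$, and hypothetical coefficients `c1`, `c2` for the $\mathrm{Var}(aX + bY)$ special case (renamed to avoid clashing with the vector `a`).

```python
import random

def mean_vector(samples):
    m = len(samples)
    return [sum(col) / m for col in zip(*samples)]

def covariance_matrix(samples):
    # Sample covariance with the 1/(m - 1) factor.
    m, mu = len(samples), mean_vector(samples)
    n = len(mu)
    return [[sum((s[i] - mu[i]) * (s[j] - mu[j]) for s in samples) / (m - 1)
             for j in range(n)] for i in range(n)]

def matmul(p, q):
    return [[sum(p[i][t] * q[t][j] for t in range(len(q))) for j in range(len(q[0]))]
            for i in range(len(p))]

def transpose(p):
    return [list(row) for row in zip(*p)]

def affine(A, a, x):
    # y = A x + a for a single sample vector x
    return [sum(A[i][t] * x[t] for t in range(len(x))) + a[i] for i in range(len(A))]

random.seed(0)
X = [[random.gauss(0, 1), random.gauss(0, 2), random.gauss(1, 1)] for _ in range(200)]
A = [[1.0, -2.0, 0.5], [0.0, 3.0, 1.0]]  # hypothetical A in R^{2x3}
a = [4.0, -1.0]                           # hypothetical a in R^2

Y = [affine(A, a, x) for x in X]
lhs = covariance_matrix(Y)                                      # Cov(AX + a)
rhs = matmul(matmul(A, covariance_matrix(X)), transpose(A))     # A Cov(X) A^T
print(max(abs(lhs[i][j] - rhs[i][j]) for i in range(2) for j in range(2)))  # tiny (rounding only)

# Special case Var(c1 X_1 + c2 X_2) = c1^2 Var(X_1) + c2^2 Var(X_2) + 2 c1 c2 Cov(X_1, X_2).
c1, c2 = 2.0, -3.0
S = covariance_matrix(X)
var_combo = covariance_matrix([[c1 * x[0] + c2 * x[1]] for x in X])[0][0]
formula = c1**2 * S[0][0] + c2**2 * S[1][1] + 2 * c1 * c2 * S[0][1]
print(abs(var_combo - formula))  # tiny (rounding only)
```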

Recall that if $X$ and $Y$ are independent then $\mathrm{Cov}(X, Y) = 0$, but the converse is not true in general.
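A standard counterexample for the converse: take $X$ uniform on $\{-1, 0, 1\}$ and $Y = X^2$. Since $Y$ is a function of $X$ the two are clearly dependent, yet $\mathrm{Cov}(X, Y) = \mathbb{E}[X^3] - \mathbb{E}[X]\,\mathbb{E}[X^2] = 0$. The sketch below (our own example, exact arithmetic) checks both facts.

```python
from fractions import Fraction

px = {-1: Fraction(1, 3), 0: Fraction(1, 3), 1: Fraction(1, 3)}  # X uniform on {-1, 0, 1}
joint = {(x, x * x): p for x, p in px.items()}                   # joint PMF of (X, Y) with Y = X^2

def expect(h):
    # E[h(X, Y)] under the joint PMF
    return sum(p * h(x, y) for (x, y), p in joint.items())

cov = expect(lambda x, y: x * y) - expect(lambda x, y: x) * expect(lambda x, y: y)
print(cov)  # 0

# Independence would require P(X = 0, Y = 0) = P(X = 0) P(Y = 0).
p_x0_y0 = joint.get((0, 0), 0)
p_x0 = px[0]
p_y0 = sum(p for (x, y), p in joint.items() if y == 0)
print(p_x0_y0, p_x0 * p_y0)  # 1/3 1/9
```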
